The Initial Approach

“The original remote controller for music is the conductor’s baton” (Roads, 1996).

Whether or not it is accurate, Curtis Roads’ statement prompts the question: What if you do not use a baton? I found myself asking a further question: What if we were to take the remote control and develop it even further? By this I mean developing a system in which the conductor can use physical bodily movements or gestures to remotely control the acoustic sound, the digitally processed timbre of that sound, and the sonic spectrum.

This opens a floodgate of possibilities for extending the orchestral conductor’s capacity to control the overall flow of the “whole” musical performance. Instead of relying on a cue-man and/or sound engineer in the middle of the hall who triggers the digital sounds and mixes them with the analog sounds of the orchestra, the conductor now has the opportunity to extend her conducting expression and interpretation in an unmediated way.

The ConDiS project aims to extend compositional possibilities, the role of the conductor, and the performance experience. Emphasis is placed on changing as little as possible of both the composer’s traditional way of writing and the conductor’s traditional conducting gestures. Therefore, the notes, dynamics, and duration of the instrumental parts are written in the traditional manner in the form of a musical score.

Special notation or instructions are added to provide expanded opportunities for composing the live electronic sounds as if they were an actual extension of the ensemble of instruments. This allows me to treat the sonority and dynamics of the electronic part in a way similar to the traditional written score. It also enables the conductor to interpret the score she reads with more precision while still maintaining the flexibility of live performance, expression, and dynamics, allowing the whole to flow in time and space. In other words, this system creates the opportunity to conduct and control the live electronic sounds and treat them as though they were actual instruments.

Why Interactive Conducting?

The ConDiS concept crystallized during a conversation with conductor Cathrine Winnes following a concert honoring the 100th anniversary of the birth of composer John Cage. We shared an interest in creating a conducting tool that would enable conductors to conduct electronic sounds. Cathrine was therefore my choice of conductor from the beginning, and the project was initially developed specifically for her. When I started to organize the Nordic Tour dates with Trondheim Sinfonietta, it became clear that Cathrine was fully booked. I therefore asked conductor Halldis Rønning, whom I had seen conducting Trondheim Sinfonietta at a concert in Trondheim, to step in for Cathrine.

Conducting is an extraordinary form of conversation in which a person stands in front of a group of people and communicates without producing any sound themselves. Conducting is based on a form of sign language we might refer to as conducting gestures, a language learned through years of practice. In addition to gestures primarily involving the hands, the conductor adds other bodily movements, facial expressions, and eye contact. To characterize this implicit information I will use the term “exformation,” which was introduced by Tor Nørretranders in his book The User Illusion: Cutting Consciousness Down to Size (Nørretranders, 1999). It was also later used by composer Jesper Nordin as a title for his mixed music composition, Exformation Trilogy. The term is perhaps best described by Baptiste Bacot and François-Xavier Féron in their paper “The Creative Process of Sculpting the Air by Jesper Nordin: Conceiving and Performing a Concerto for Conductor with Live Electronics”:

Exformation relates to the fact that when people are communicating, they are sharing an implicit knowledge, which is taken into consideration tacitly in the comprehension of the words. For instance, facial expressions, tone of voice, and the gestures of each speaker give away a body of information, although it remains implied. Exformation is ‘everything around the information, everything you are not saying’ (PI 2), that is to say, an important part of human interaction. (Bacot & Féron, 2016)

Motivation

I have gradually realized over the course of the research process that the motivations and concept behind ConDiS have much deeper roots than I had imagined. This discovery (revelation) has, over time, become an important part of the research process, as there is no progress without background. In other words: “No one escapes his origins.” Familiarity with my background as a composer is important for understanding the need and motivation behind the development of the ConDiS (Conducting Digital System) project: knowing where the roots lie and the path the shoots took to the surface.

Turning Point

Attending a lecture by the American composer and electronic music pioneer Morton Subotnick,[1] who spoke about his visions for the future of music and music technology, instantly altered my own vision of future developments in contemporary classical music. I saw how it would fuse with digital technology and extend its limitless limits; how one could see and hear a French horn player play his horn and then hear that sound gradually morph into a totally different sonority that would travel through sonic and physical space. This was in 1988, and these ideas still seemed light years away, but the vision had a tremendous impact on me as a then young composer.

A few years later I witnessed Theremin performer Natasha Theremin[2] and computer pioneer Max Mathews[3] performing Sergei Rachmaninov’s Vocalise on the original Theremin and Radio Baton instruments.

This historic concert was held outdoors at Stanford University’s Frost Amphitheater on September 27, 1991, as part of Stanford’s Centennial Celebrations.

I remember Natasha gracefully moving her arms to create such expressive and wonderful sounds on the legendary Theremin, while Max Mathews played his Radio Baton by drawing various gestures on a relatively small rectangular table. These unforgettable events marked a turning point in my professional career as a composer: they gave birth to preliminary thoughts about interactive performance that would later develop into full-fledged multimedia performances including dance and video.[4] Real-time interactive live musical performance was the birthright of these ideas, made possible by ever faster computers and the introduction of MIDI and the object-oriented programming environment Max.

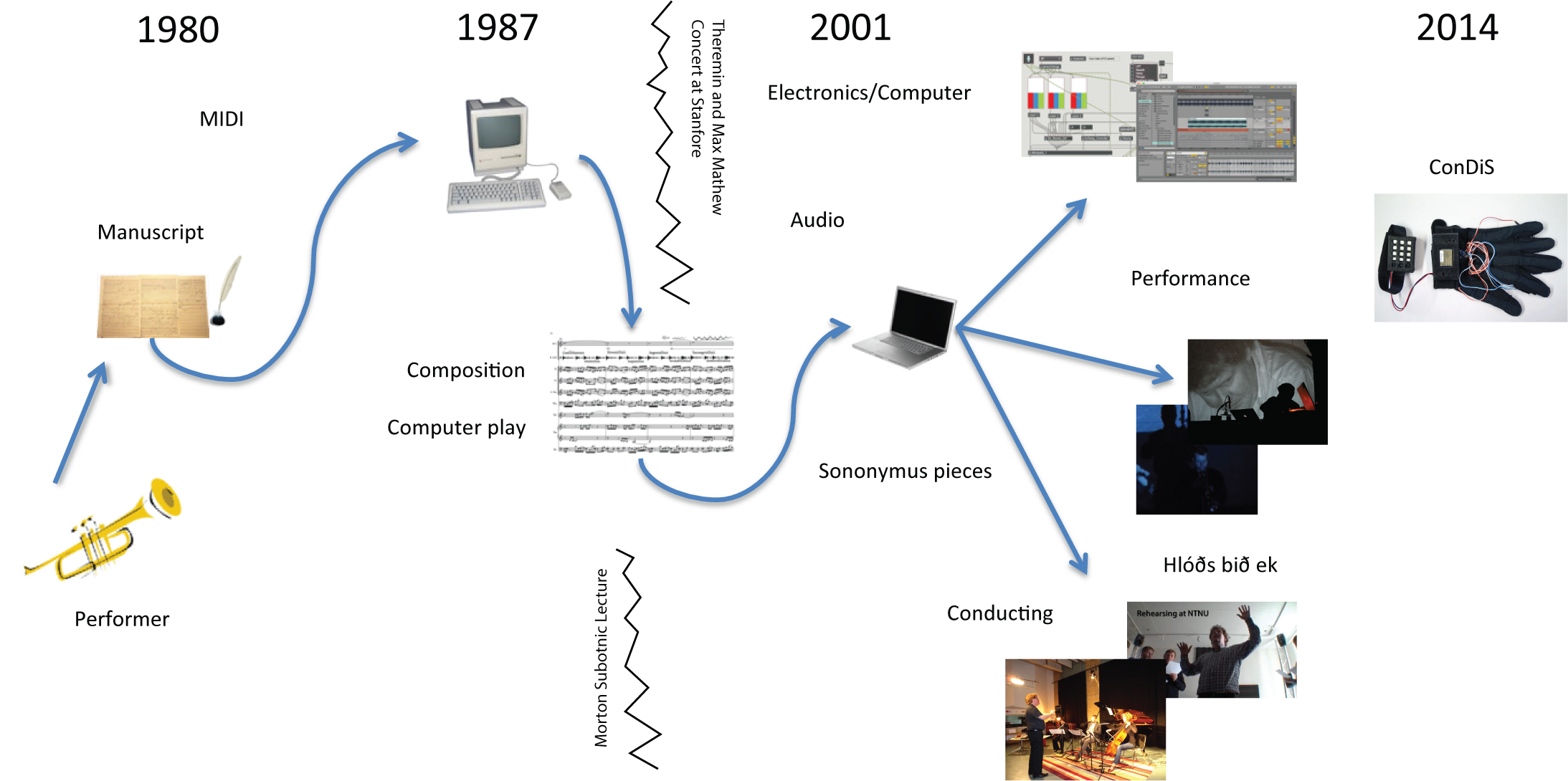

Figure 6. The historical path to ConDiS

The Path to Interactive Conductor

The following is a brief overview of my path to ConDiS, written to clarify how my personal needs and musical interests gradually led to the idea of ConDiS. In this, I use examples from my compositions to explain how the need I experienced revealed itself in parallel with technological developments and, consequently, enhanced possibilities for performing live interactive mixed music. I explore how these increased capabilities result in the need to write mixed music for larger musical groups. This then increases the need for a musical solution to connect and synchronize the control of electronic and acoustic sounds.

Early Interactive Compositions

Computer Play (1988)

In 1988, I wrote my first interactive piece, Computer Play, for viola, piano, and “interactive” computer. At the time, the Macintosh SE had just been introduced with more processing power than its predecessor, extending the possibilities for interactive performance.

The computer part was written using the software “Professional Composer,”[5] and the computer played this musical score in real time alongside the live instrumental performance. To synchronize the live performers and the computer, the composer conducted while sitting on a chair on stage, keeping the tempo synchronized with conducting gestures while watching a metric clock on the computer screen.[6]

Experience and Results

The interactivity deployed in the performance of Computer Play was somewhat primitive, but the components of future development were already there. The need for interactive conducting was obvious. It was the technology that was limited, or at least too limited for my musical intention.

Although it was possible during the performance to synchronize the performers and the computer by conducting, the conducting process was robotic. The conductor had to follow the computer’s internal clock without any ability to interact with or alter it once the performance had started. What I wanted was more musical expression, more rubato,[7] more on-the-spot reaction, more musical interaction between the acoustic live performance and the digitized sound and performance of the computer. These interactive performance expressions, however, were not available, or at least not available to me. It was perhaps a bit too early for full real-time interactivity, but one could sense something in the air. It was not until 1992, as composer-in-residence at CNMAT,[8] that I made my next serious attempt at composing live mixed music.

Crossover Sonic Art

Goblins from the Land of Ice (1993-95)

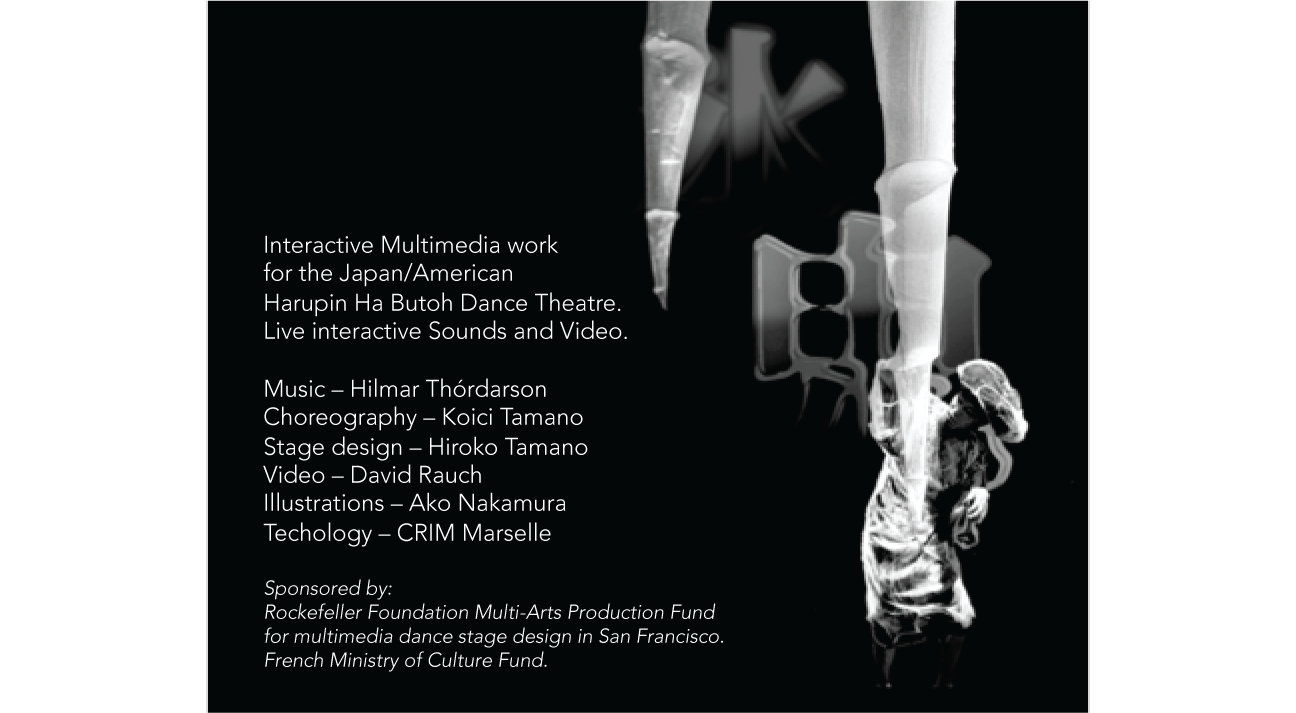

The multimedia work Goblins from the Land of Ice was written for the Japanese/American Butoh[9] dance company Harupin-Ha.[10] It is based on figures from Icelandic folklore, the so-called Yule Lads.[11] Here I used, for the first time, interactive media to control and trigger sounds and video. Dancers on stage would trigger light sensors with their body movements, creating a variety of sequences of music and video clips. As their gestures shifted in dance, the rhythm and playback speed would change accordingly. The sonic material was based on recordings from volcanic eruptions and hot springs in Iceland. As the Butoh dancers slowly moved around making their unique body gestures, sounds of running lava, muddy hot springs, and geysers were triggered.

This was an adventure, a new dimension, a crossover between the fields of written contemporary music and sonic art. Given my background as a classically trained composer, most of my music had been in the field of experimental instrumental music of the mid-twentieth-century European tradition. But I felt a growing ambition to fulfill my own musical desires. It was in the then newly developed capabilities of sensor technology, and the possibilities these offered for composing and performing live interactive music,[12] that I found my path. Since that time, interactivity between sound, gestures, and live performance has played an important part in my compositional work.

Experience and Results

Goblins from the Land of Ice was an international collaboration that included light sensor technology developed at two research centers: CRIM (Création et Recherche en Informatique Musicale) and CNRS (Le Centre National de la Recherche Scientifique). Creating Goblins from the Land of Ice was a very challenging task, not only technically but also artistically. It was a meeting point of different art forms and cultures. The experience of staging Goblins taught me a valuable lesson about the danger of giving other art forms the opportunity to play with your music live on stage, especially if you as a composer intend specific meanings and are not ready to seek musical compromise.

Interactive Compositions for Solo Instruments

Sononymus for Flute (1998-2000)

In my first Sononymus piece, “Sononymus for Flute,”[13] the electronic part was pre-recorded on a CD as an accompaniment to, or interplay with, the flute. All the electronic sounds were composed beforehand in the music studio using the sound of the flute as the main sonic source. Although it was a successful composition and one of the most frequently played pieces in the Sononymus sequence, I was not fully satisfied. I felt constrained, I felt the musician was constrained, and I felt the music was constrained. Why?

I felt constrained because I had no way to synchronize the flute part and the CD-recorded part. In an attempt to keep them in sync, I placed a set of four very soft flute key-clap sounds at the very beginning of the piece. The key claps were meant to give the performer the tempo, a metronome marking of 72 (see the example in Fig. 5). Since there were no other metric indications and no click track, I had to be very careful not to write anything that might cause a major asynchronous event, such as rubato playing or accelerando/ritardando.

I felt the performer was constrained since he had to follow the CD player no matter what. By listening to the recording over and over, the performer surely learned the electronic part by heart, to the point of identifying a few sounds or signals that he could use as milestones to stay in sync with the CD player. I felt the music was constrained because the tempo had to stay the same regardless of the venue in which it was performed. Musical tempo depends to a large extent on the resonance of the hall in which the performance takes place: the longer the resonance, the slower the tempo.

The duration of reverberations in Gothic churches, therefore, does not permit excessively rapid modulations and also sets more or less narrow limits on the ability to recognize rapid figures, in spite of the reflections from posts or pillars. The acoustic conditions in these halls are better suited for the slower line sequences of liturgical songs or Gregorian chants. (Meyer & Hansen, 2009)

The musicologist Thurston Dart argues that early composers were very aware of the resonance effects of different performance halls on their music “and that they deliberately shaped their music accordingly” (Dart, 1954). The British architectural theorist and acoustician Hope Bagenal states that without the Reformation, which resulted in reduced reverberation in Lutheran churches, polyphonic music could not have developed beyond a certain point since less resonance led to new possibilities for “more rapid music flow, and true tempo effects in church music” (Bagenal, 1951). I can confirm this from my own experience. One of my pieces, Concert for Violin and Orchestra (1986), went from an overall duration of approximately 14 minutes when performed in a concert hall to just over 19 minutes when performed in a large cathedral.

Sononymus for Human Body (2005)

In my work Sononymus for Human Body,[14] I was able for the first time to digitally control the sonority and the spatial location of the sound (panning).[15] Employing the DIEM Digital Dance System,[16] I made my first move toward using bodily movement as a metaphor. I used environmental sounds from my own recording of a seashore made at one of Iceland’s most magical places, Malarrif on the Snæfellsnes peninsula. Using my own body motion, I could set the volume to its minimum by holding my arms tight against my body and then increase it gradually by stretching out my arms, reaching maximum volume with my arms fully outstretched. By moving my arms from left to right, I not only shifted the sound from left to right but also moved it around the hall using four-channel surround sound. This was a unique experience for me, standing there on stage with Mother Nature literally in my hands and being able to control its volume and its spatial movement.
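The gesture mapping described above can be illustrated with a minimal sketch. The text does not document how the DIEM data were processed, so this assumes that arm extension arrives as a single MIDI controller value (0 = arms at the body, 127 = fully outstretched), that arm direction is available as an angle in degrees, and that the four loudspeakers stand at the corners of the hall. The function names and the pairwise equal-power panning law are illustrative choices, not the system used in the performance.

```python
import math

# Hypothetical layout: angles in degrees, clockwise from the front of the hall.
# Index 0 = front-right (45), 1 = rear-right (135), 2 = rear-left (225), 3 = front-left (315).
SPEAKER_COUNT = 4
FIRST_SPEAKER_DEG = 45.0
SPACING_DEG = 360.0 / SPEAKER_COUNT


def arm_extension_to_gain(cc_value: int) -> float:
    """Map an assumed MIDI controller value (0 = arms at the body,
    127 = arms fully outstretched) to a linear gain from 0.0 to 1.0."""
    clamped = min(max(cc_value, 0), 127)
    return clamped / 127.0


def quad_pan(angle_deg: float) -> list[float]:
    """Equal-power pan between the two speakers adjacent to angle_deg."""
    gains = [0.0] * SPEAKER_COUNT
    offset = (angle_deg - FIRST_SPEAKER_DEG) % 360.0
    # Speaker at or just before the angle; the modulo guards against float wrap-around.
    k = int(offset // SPACING_DEG) % SPEAKER_COUNT
    # Position between speaker k (0.0) and speaker k + 1 (1.0).
    frac = (offset % SPACING_DEG) / SPACING_DEG
    gains[k] = math.cos(frac * math.pi / 2)
    gains[(k + 1) % SPEAKER_COUNT] = math.sin(frac * math.pi / 2)
    return gains


def speaker_gains(cc_value: int, angle_deg: float) -> list[float]:
    """Combine overall volume (arm extension) with spatial position (arm direction)."""
    volume = arm_extension_to_gain(cc_value)
    return [volume * g for g in quad_pan(angle_deg)]


if __name__ == "__main__":
    print(speaker_gains(127, 45.0))  # arms fully open, sound at the front-right speaker
    print(speaker_gains(64, 0.0))    # about half volume, centered between the front speakers
```

Equal-power panning keeps the sum of squared gains constant, so a sound travelling around the hall does not appear to dip in loudness between speakers; only the arm-extension value changes the perceived volume.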

It was an incredible feeling to be able to exert control with the movement of my body, the sound of the sea waves coming from the back of the hall to the front with increasing velocity, with me making them move to the left and the right, right-to-left and back again with decreasing volume, and so on and so forth. While standing on the stage I felt a kind of God-like sensation of “controlling the forces of nature.” What if a conductor could control electronic music the same way with her body movements?

Experience and Results

Being able to control the volume and spatial location of the sound in real time using the movement of my body was a very promising development in my musical progress. As a composer whose interests lie both in interactive media and in the classical tradition of composing music on paper, I found performing Sononymus for Human Body a unique and encouraging experience: encouraging because the interactivity worked, and unique because the expression and musical feeling were there. But a written composition, a physical score, was still missing.

The performance was completely improvised; nothing was written down beforehand. I drew on a repository of years of musical training to create music in structured form; that structure was formed unconsciously by this training.

Extended Interactive Composition

The Hljóðs bið ek compositions (2013)

The Hljóðs bið ek composition involved more instruments (voices), and the well-known challenges of real-time polyphonic pitch tracking made that approach highly impractical. This called for alternative methods.

Hljóðs bið ek for Voices

The singers, in groups of two, form a circle around the audience, with the conductor located in the middle of the audience. The voices of each pair are routed via computer to a speaker located behind the pair and to a speaker located behind the next group to the right, creating a sense of surround sound. I ended up using a simple, custom-made amplitude envelope follower to analyze the vocal volume and control the electronics in real time.
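The custom envelope follower is not described further, so the following is a minimal sketch of a generic design rather than a reconstruction of the original. It rectifies the incoming vocal signal and smooths it with separate attack and release time constants, so the envelope rises quickly when a voice gets louder and falls slowly when it fades. The resulting level is then scaled to a control value, for example the gain of an electronic layer. The default time constants and the `to_control` floor and ceiling are hypothetical values.

```python
import math


def envelope_follower(samples, sample_rate, attack_ms=10.0, release_ms=200.0):
    """Track the amplitude envelope of a signal with a one-pole
    attack/release smoother applied to the rectified input."""
    # Per-sample smoothing coefficients derived from the time constants.
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    env = 0.0
    out = []
    for x in samples:
        level = abs(x)  # full-wave rectification
        # Use the fast coefficient while the level rises, the slow one while it falls.
        coeff = attack if level > env else release
        env = coeff * env + (1.0 - coeff) * level
        out.append(env)
    return out


def to_control(env_value, floor=0.01, ceiling=0.5):
    """Scale an envelope value into a 0.0 to 1.0 control signal
    (hypothetical mapping, e.g. the volume of an electronic layer)."""
    if ceiling <= floor:
        raise ValueError("ceiling must be greater than floor")
    return min(max((env_value - floor) / (ceiling - floor), 0.0), 1.0)


if __name__ == "__main__":
    sr = 8000
    # A 220 Hz tone at amplitude 0.4 for 0.25 s, followed by 0.25 s of silence.
    tone = [0.4 * math.sin(2 * math.pi * 220 * n / sr) for n in range(sr // 4)]
    signal = tone + [0.0] * (sr // 4)
    env = envelope_follower(signal, sr)
    for t in (0.05, 0.2, 0.3, 0.45):
        i = int(t * sr)
        print(f"t={t:.2f}s  envelope={env[i]:.3f}  control={to_control(env[i]):.2f}")
```

Because it only measures loudness, a follower like this sidesteps the pitch-tracking problem entirely: it works the same whether one voice or several are singing into a microphone.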

Experience and Results

Hljóðs bið ek for Voices was premiered at the Rockheim Museum in Trondheim with me conducting. Standing there conducting on a podium in the middle of the audience made the need for conducting and controlling the electronic sound particularly evident. What if I could mix the sonic balance, bring the electronic volume up and down, change its sonority, and move it around the hall, all with my conducting gestures?

Creating a conducting tool such as ConDiS became an urgent goal.

[1] A well-known electronic music composer who wrote the first electronic work commissioned by a record company, Silver Apples of the Moon.

[2] Daughter of the legendary inventor Léon Theremin, whose Theremin was one of the first electronic musical instruments and the first to be mass-produced.

[3] Pioneer and “grandfather” of computer music, known for his GROOVE program, the first fully developed music synthesis system for interactive composition and real-time performance. He later developed the Radio Baton, a conductor’s baton for controlling a computer orchestra.

[4] My first full-scale multimedia work was Goblins from the Land of Ice, composed in 1992. It included live interactive Japanese Butoh dance and video projection.

[5] Professional Composer was among the first commercial music notation programs available on the Mac and was produced by MOTU (Mark of the Unicorn) in the 1980s.

[6] The performance took place at the Reykjavik Arts Festival on June 10, 1988, at Kjarvalsstaðir Museum, with Svava Bernhardsdottir on viola, Guðný Guðmundsdottir on piano, and me conducting.

[7] In music, “rubato” means playing with rhythmic freedom: the musician can bend or swing the rhythm.

[8] Composer-in-residence at CNMAT (Center for New Music and Audio Technologies).

[9] Butoh is a form of contemporary Japanese dance that arose in the period following the Second World War. It is known for its slow pace and awkward body postures.

[10] Harupin-Ha on Facebook: https://www.facebook.com/berkeleybutoh/

[11] Figures from Icelandic folklore, traditionally portrayed as mischievous pranksters but who, in modern times, have increasingly taken on a more benevolent role similar to Santa Claus (Father Christmas). https://en.wikipedia.org/wiki/Yule_Lads

[12] Immediate two-way communication between performer and computer, each responding to the information it receives.

[13] See YouTube recording at https://youtu.be/fLJx0I0U8wQ

[14] See the YouTube version at http://www.youtube.com/watch?v=Eo0bGunr55s

[15] Distribution of a sound signal in space, giving the impression that the sound is moving from one side to another.

[16] A gesture-control system using bend sensors to send MIDI information, developed by the Danish Institute of Electronic Music (DIEM).